There is a lot of interest around AIOps, with multiple studies finding that organizations are adopting AI at an increasing rate. In a recent Forrester report, 68% of companies surveyed said they plan to invest in AIOps-enabled monitoring solutions over the next 12 months. Gartner forecasts that by 2022, 40% of all large enterprises will combine big data and machine learning (ML) functionality to support and partially replace monitoring. AIOps has become a major focus of many IT Operations Management (ITOM) organizations in both the short and long term.

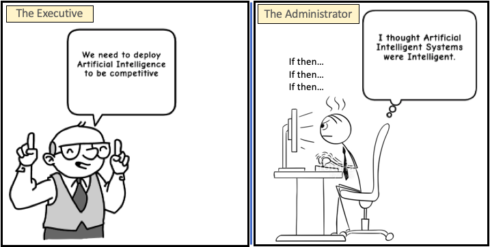

When adopting AIOps into a mature ITOM environment, there are numerous challenges that IT organizations must address. One that frequently emerges is providing context to the ML output. In layman’s terms, what does the machine learning output mean to the business?

The importance of context

In IT surveillance organizations, context is what allows an agent to act on an alert that is surfaced. Without context, an agent cannot take a remediation action and is instead relegated to a lengthy diagnostic and triage process. Context is an important issue because many of the events presented to agents today are symptoms or false positives. A 2018 study by Digital Enterprise Journal found that more than 70% of the operational data collected by IT organizations is not considered actionable. Without context, the agent has a difficult time determining when to take action and when not to.

Some of the most common types of ML algorithms being applied to ITOM are related to clustering and anomaly detection. Clustering algorithms correlate many seemingly disparate events and logs in an effort to reduce noise, while anomaly detection uncovers behavioral changes in time series data (such as performance metrics) that may not be detected by traditional threshold-based monitoring. Despite all the benefits that clustering and anomaly detection provide, they do very little to provide context. For example, just because something is anomalous doesn’t mean there is a negative impact to the business. The anomaly may be completely harmless (or not actionable), such as one that occurs during a planned maintenance window. Clustering helps to reduce noise and improve correlation; however, it does not by itself identify critical context such as a service or customer. To obtain context today, organizations rely on either manually applying rules or maintaining external data repositories, both of which are inefficient.
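To make the distinction concrete, here is a minimal sketch of anomaly detection on a metric that a static threshold would miss, followed by a context check against a planned maintenance window. The sample data, the rolling z-score method, the threshold value, and the function names are all assumptions chosen for illustration; real AIOps platforms use more sophisticated models.

```python
from statistics import mean, stdev


def zscore_anomalies(values, window=5, z_limit=3.0):
    """Return indices whose value deviates more than z_limit standard
    deviations from the mean of the preceding `window` samples."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > z_limit:
            anomalies.append(i)
    return anomalies


def actionable(anomalies, maintenance_windows):
    """Drop anomalies that fall inside a planned maintenance window.
    Each window is an inclusive (start_index, end_index) pair."""
    return [
        i for i in anomalies
        if not any(start <= i <= end for start, end in maintenance_windows)
    ]


# Hypothetical CPU utilization samples (percent), one per minute.
cpu = [20, 21, 19, 20, 22, 21, 60, 20, 21, 19, 20, 21, 20, 58, 21]
STATIC_THRESHOLD = 80  # assumed traditional alerting threshold

threshold_alerts = [i for i, v in enumerate(cpu) if v > STATIC_THRESHOLD]
anomalies = zscore_anomalies(cpu)
alerts = actionable(anomalies, maintenance_windows=[(12, 14)])

print(threshold_alerts)  # static threshold never fires
print(anomalies)         # both spikes are flagged as anomalous
print(alerts)            # only the spike outside maintenance remains
```

In this sketch the anomaly detector flags both spikes, but it has no way of knowing that the second one is harmless. That knowledge comes only from the maintenance schedule, which is exactly the kind of context the detector cannot supply by itself.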

A recipe for disaster

A few ways organizations address the context problem include:

- Enriching the ML output with metadata from configuration management databases (CMDBs) and/or inventory systems

- Supervised learning via manually labeling the output and training the system

- Building static rules that tie the machine learning output to something meaningful, such as incident type, customer, or service

Each of these methods has significant drawbacks for companies trying to implement an AIOps solution.

CMDBs are notoriously difficult to implement and rarely have a complete picture of the infrastructure and services. Gartner estimates that 80% of CMDB projects fail and that it can take up to three attempts to get one right. Additionally, service management systems typically update their CMDB only daily or weekly. Relying on incomplete and untimely data for a real-time AIOps ITOM solution is a recipe for failure.
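The following sketch illustrates how incomplete or stale CMDB data undermines enrichment. The record layout, hostnames, field names, and the one-day freshness limit are hypothetical and do not reflect any particular CMDB product.

```python
from datetime import datetime, timedelta

# Hypothetical CMDB extract keyed by hostname. "synced" is the time the
# record was last refreshed from the service management system.
CMDB = {
    "web-01": {"service": "checkout", "synced": datetime(2024, 1, 1, 0, 0)},
    "db-01": {"service": "orders", "synced": datetime(2023, 12, 20, 0, 0)},
}


def enrich(alert, cmdb, now, max_age=timedelta(days=1)):
    """Attach service context to an alert and label how trustworthy it is."""
    record = cmdb.get(alert["host"])
    if record is None:
        # The host is not in the CMDB at all: no context is available.
        return {**alert, "service": None, "context": "missing"}
    freshness = "stale" if now - record["synced"] > max_age else "fresh"
    return {**alert, "service": record["service"], "context": freshness}


now = datetime(2024, 1, 1, 12, 0)
alerts = [
    {"host": "web-01", "message": "latency anomaly"},
    {"host": "db-01", "message": "disk anomaly"},
    {"host": "cache-07", "message": "memory anomaly"},
]
enriched = [enrich(a, CMDB, now) for a in alerts]
for e in enriched:
    print(e["host"], e["service"], e["context"])
```

Only one of the three alerts receives context that can be trusted. The other two either rely on an outdated record or have no record at all, so the agent is back to manual triage.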

Manually labeling the machine learning output works well with datasets that do not change frequently but becomes difficult in environments where change is common. This challenge is referred to as concept drift. Concept drift happens when the statistical properties of the target variable the model is trying to predict change over time, which causes the model to become less accurate as time passes. To account for concept drift in environments where change is constant, like IT organizations, there must be a rigorous periodic retraining process. This constant manual retraining puts an undue burden on IT administration and data science teams. In addition, if retraining is not done properly, it erodes confidence in the AIOps platform.
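One simple way to notice concept drift is to compare a model's recent accuracy with the accuracy it had when it was trained. The sketch below assumes that ground-truth labels eventually become available for each prediction. The class name, window size, and tolerance are illustrative choices, not a standard API.

```python
from collections import deque


class DriftMonitor:
    """Track recent prediction accuracy and flag when it falls too far
    below the accuracy measured at training time."""

    def __init__(self, baseline_accuracy, window=10, tolerance=0.10):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # True where prediction was right

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def recent_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Wait for a full window so a few early misses do not trigger retraining.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.baseline - self.recent_accuracy() > self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.9)

# Period 1: the environment matches the training data (9 of 10 correct).
for predicted, actual in [("incident", "incident")] * 9 + [("incident", "noise")]:
    monitor.record(predicted, actual)
before_change = monitor.needs_retraining()

# Period 2: the environment has changed (only 6 of 10 correct).
for predicted, actual in [("incident", "incident")] * 6 + [("incident", "noise")] * 4:
    monitor.record(predicted, actual)
after_change = monitor.needs_retraining()

print(before_change, after_change)
```

A check like this only detects the problem. Someone still has to relabel data and retrain the model, which is the manual burden described above.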

Static rules can provide context but are next to impossible to maintain in a large, complex environment. According to a recent Forrester report, 33% of companies are using 20 or more infrastructure and application monitoring tools. Each of these can drive significant alert volumes into operations, making it untenable to build and maintain static rules.

Solving the context need

To address the context challenge, organizations should consider leveraging a context-aware machine learning model. The model should be based on current and historical IT data that represents the operational state of the environment. Coupling this model with an auto-ML training loop continually improves it and minimizes concept drift, resulting in a continually updated and accurate ITOM AIOps platform.