Artificial intelligence (AI) and machine learning (ML) are everywhere. From self-driving cars to smart home devices, there is tremendous excitement about the potential of these technologies. However, operational challenges remain. According to research from McKinsey Global Institute, only 20 percent of companies have deployed at least one AI technology and only 10 percent have deployed three or more. Furthermore, out of 160 AI use cases examined, only 12 percent had progressed beyond the experimental stage. Yet successful early AI adopters report profit margins that are 3 to 15 percent higher than the industry average. So, for the 88 percent of use cases that have not reached production, there is a great deal of untapped potential.

Managing ML (and other forms of AI) in production requires not just mastery of data science and model development, but also the mastery of MLOps, which is the practice of ML application lifecycle management in production. A holistic approach to MLOps is critical to AI business success, and a key component is the health management of ML applications throughout their lifecycle.

Learning What Is Taught

What makes ML applications challenging from a health management perspective? ML pipelines are code, and as such are subject to the same kinds of issues as other production software, such as bugs. However, the unique nature of ML generates additional health concerns. ML learns and then predicts based on what was learned. Unlike more deterministic applications (such as databases), which have a correct answer, there is no a priori correct answer for an ML prediction. An ML prediction is the application of a learned model to new information.

An ML model is only as good as its training. A recent example from MIT shows how an AI program trained on disturbing imagery can see similar images in whatever is presented regardless of context. Training on data with hidden social bias gives rise to models that imitate this behavior when making predictions.

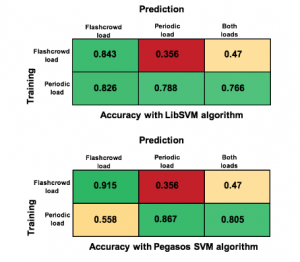

To further illustrate this issue, we describe an artificial experiment where a well-established and classic ML algorithm (Support Vector Machines) is used to predict SLA violations from a publicly available dataset. Figure 1 shows how the algorithm, trained on different workload patterns, behaves when shown other patterns that were not part of its training experience. When incoming traffic looks like training data, the algorithm does well. When the incoming traffic pattern deviates from training data, the algorithm struggles and predictive performance varies.

Figure 1: Accuracy of an ML algorithm when workload pattern changes
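The effect in Figure 1 can be reproduced in miniature. The sketch below is not the experiment described above: it uses synthetic data and a one-dimensional nearest-centroid rule as a simple stand-in for the Support Vector Machine, and every name and number in it (`make_samples`, the load means of 40, 70, 60, and 90) is a hypothetical choice made for illustration. It only shows that a model fitted to one workload pattern loses accuracy when the pattern moves.

```python
import random
from statistics import mean


def make_samples(rng, n, normal_mean, violation_mean, spread=5.0):
    """Return (load, label) pairs; label 1 marks a synthetic SLA violation."""
    samples = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = violation_mean if label else normal_mean
        samples.append((rng.gauss(center, spread), label))
    return samples


def fit_threshold(samples):
    """Nearest-centroid rule in one dimension: midpoint between class means."""
    normal = [x for x, y in samples if y == 0]
    violation = [x for x, y in samples if y == 1]
    return (mean(normal) + mean(violation)) / 2.0


def accuracy(threshold, samples):
    """Fraction of samples where 'load above threshold' matches the label."""
    hits = sum(1 for x, y in samples if (x > threshold) == bool(y))
    return hits / len(samples)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Train on one workload pattern (hypothetical load levels).
train = make_samples(rng, 2000, normal_mean=40, violation_mean=70)
threshold = fit_threshold(train)

# Evaluate on traffic that resembles training, then on a pattern whose
# baseline load has risen by 20 units.
familiar = make_samples(rng, 2000, normal_mean=40, violation_mean=70)
shifted = make_samples(rng, 2000, normal_mean=60, violation_mean=90)

acc_familiar = accuracy(threshold, familiar)
acc_shifted = accuracy(threshold, shifted)
print(f"threshold={threshold:.1f}")
print(f"accuracy on familiar traffic: {acc_familiar:.3f}")
print(f"accuracy on shifted traffic:  {acc_shifted:.3f}")
```

Nothing in the code is broken in the second case. The learned threshold is simply no longer appropriate for the traffic it sees, which is why this class of failure is hard to catch with conventional software monitoring.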

While entertaining, these examples also illustrate a key problem. For organizations to reduce risk and optimize ROI for production ML, they need to ensure that ML predictions are of acceptable quality, and to monitor and maintain this quality throughout the application lifetime. This ongoing quality management is the ML health portion of MLOps.

ML Health Challenges in Production

Production ML Health is complicated by several factors:

- ML models are only as good as the data that trained them. In production, incoming data is unpredictable and can change both gradually over time and abruptly due to unforeseen events.

- During supervised ML training, model efficacy is determined by validation on labeled data for which the desired answer, also known as ground truth, is known. Well-established approaches exist for evaluating algorithmic efficacy via ground truth labels. In production, there are no labels. The correct answer is not known at the time of prediction, so classic evaluations based on ground truth cannot be used.

- Given the above, even traditional software issues (such as bugs and faulty updates) can be difficult to find and diagnose, since outcomes are non-deterministic by default.

More advanced AI techniques (such as Reinforcement Learning, Online Learning, etc.) further exacerbate these diagnostic challenges since these models themselves will change due to unpredictable incoming data, making it even harder to separate legitimate concerns from expected algorithmic behavior.

Left unresolved, these issues can lead to business loss, bias concerns, or even health and life impact, depending on what the ML algorithm is used for.

Managing ML Health within an MLOps Practice

As ML and AI are themselves nascent technologies, so is the technology underlying ML Health. Where deterministic answers are not available, statistical (and in some cases ML) techniques can be used to understand baseline behaviors and detect issues in ML production pipelines. For example:

- Detecting anomalies of ML execution, to determine shifts in incoming data patterns or prediction patterns

- Using A/B tests to compare behavior of multiple ML models

- Using Canary (or Control) pipelines that compare the sophisticated primary algorithm/model with a less sophisticated but established control algorithm
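Two of these techniques can be sketched without any ground truth labels. The example below is a minimal illustration, not a prescribed implementation: it uses the Population Stability Index (PSI), one of several possible drift statistics, to compare live inputs against a training-time baseline, and a simple agreement rate to compare a primary model's predictions with those of a control model. The function names, bin count, and synthetic data are assumptions made for the example. The thresholds of 0.1 and 0.25 mentioned in the comments are a commonly cited rule of thumb, not a standard.

```python
import math
import random


def psi(baseline, live, bins=10, eps=1e-6):
    """Population Stability Index of `live` relative to `baseline`.

    Bins are equal-width over the baseline range; live values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = fractions(baseline), fractions(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def agreement_rate(primary_preds, control_preds):
    """Share of requests where the primary and control models agree."""
    if len(primary_preds) != len(control_preds):
        raise ValueError("prediction lists must have the same length")
    matches = sum(p == c for p, c in zip(primary_preds, control_preds))
    return matches / len(primary_preds)


rng = random.Random(1)  # fixed seed so the run is repeatable

# Synthetic feature values: a training-time baseline and two live windows.
baseline = [rng.gauss(50, 10) for _ in range(5000)]
live_same = [rng.gauss(50, 10) for _ in range(5000)]
live_drifted = [rng.gauss(65, 10) for _ in range(5000)]

psi_same = psi(baseline, live_same)
psi_drifted = psi(baseline, live_drifted)

# Rule of thumb (an assumption, tune per application):
# below 0.1 is usually read as stable, above 0.25 as significant drift.
print(f"PSI, similar traffic: {psi_same:.4f}")
print(f"PSI, drifted traffic: {psi_drifted:.4f}")

# Canary comparison on four hypothetical predictions.
canary_agreement = agreement_rate([1, 0, 1, 1], [1, 0, 0, 1])
print(f"primary/control agreement: {canary_agreement:.2f}")
```

A falling agreement rate or a rising PSI does not say which model is right. Both are signals that behavior has moved away from the baseline and that a closer look is warranted.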

We advocate for ML Health management as a focused part of an MLOps practice, one that is as important as deployment, automation, and other operational areas. Managing ML Health requires combining traditional practices for application diagnostics (performance monitoring, uptime and error monitoring) with new practices focused on detecting and diagnosing the unique issues of ML applications.