Amazon has announced that it made several improvements to Amazon Simple Queue Service (Amazon SQS) to reduce latency, increase fleet capacity, improve scalability, and reduce power consumption.

Amazon SQS is a messaging queue for microservices, distributed systems, and serverless apps, and it’s been around since 2006.

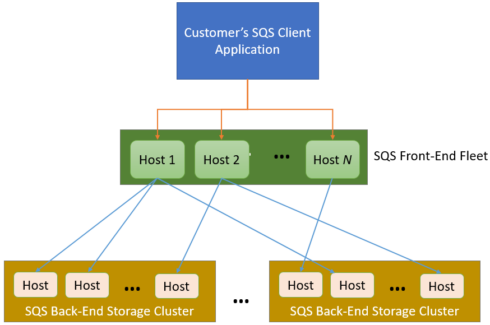

The company says it improved the connection between the customer-facing front-end and the storage back-end. Originally, the two services used a separate connection for each request. As a result, each front-end had to connect to many back-end hosts and could sometimes hit a hardware-based connection limit.

“While it is often possible to simply throw hardware at problems like this and scale out, that’s not always the best way. It simply moves the moment of truth (the ‘scalability cliff’) into the future and does not make efficient use of resources,” Jeff Barr, chief evangelist for AWS, wrote in a blog post.

A new protocol can carry multiple requests and responses over a single connection. It uses 128-bit IDs and checksumming to prevent crosstalk, and server-side encryption to prevent unauthorized access to data in the queue.
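Amazon has not published the wire format, so the following is only a rough sketch of the general idea: several in-flight requests share one connection, and each frame carries a 128-bit ID plus a checksum so a response can be matched to its request and corrupted frames rejected. The frame layout, the use of CRC32, and the names `encode_frame`, `decode_frames`, and `demo` are all assumptions made for illustration. Encryption is left out entirely.

```python
import struct
import uuid
import zlib

# Hypothetical frame layout (NOT the real SQS wire format):
# 16-byte request ID | 4-byte payload length | payload | 4-byte CRC32
HEADER = struct.Struct("!16sI")
TRAILER = struct.Struct("!I")


def encode_frame(request_id: bytes, payload: bytes) -> bytes:
    """Wrap a payload with its 128-bit ID and a checksum over header + payload."""
    header = HEADER.pack(request_id, len(payload))
    checksum = zlib.crc32(header + payload)
    return header + payload + TRAILER.pack(checksum)


def decode_frames(stream: bytes):
    """Yield (request_id, payload) for each frame found in a byte stream."""
    offset = 0
    while offset < len(stream):
        request_id, length = HEADER.unpack_from(stream, offset)
        body_end = offset + HEADER.size + length
        payload = stream[offset + HEADER.size:body_end]
        (expected,) = TRAILER.unpack_from(stream, body_end)
        # Reject the frame if the bytes were altered in transit.
        if zlib.crc32(stream[offset:body_end]) != expected:
            raise ValueError("checksum mismatch")
        yield request_id, payload
        offset = body_end + TRAILER.size


def demo() -> dict:
    """Simulate two requests whose responses arrive out of order on one stream."""
    # uuid4() gives a random 128-bit value; .bytes is its 16-byte form.
    pending = {uuid.uuid4().bytes: "send", uuid.uuid4().bytes: "receive"}
    ids = list(pending)
    wire = encode_frame(ids[1], b"receive-result") + encode_frame(ids[0], b"send-result")
    # The ID, not the arrival order, decides which request a response belongs to.
    return {pending[rid]: payload for rid, payload in decode_frames(wire)}
```

Because every response is routed by its ID, the front-end no longer needs one connection per outstanding request, and a frame that fails its checksum is dropped rather than delivered to the wrong caller.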

According to Amazon, the new protocol went into production earlier this year and has already processed over 750 trillion requests. It has also reduced dataplane latency by 11% and increased the number of requests that SQS hosts can handle by 17.8%.
