Mastering Kubernetes health and performance goes beyond the initial setup. The real challenge starts on Day 2, when operations teams must engage in a continuous cycle of monitoring, identifying, and resolving issues before they disrupt services. This involves addressing two types of issues: critical real-time problems that can cause immediate downtime, and ongoing reliability risks that, if left unaddressed, may degrade performance over time.

Modern Kubernetes environments, especially those built on microservices, are complex enough that even with robust monitoring it can be very difficult to pinpoint root causes, correlate data effectively, and decide how urgent an issue is and how to remediate it. Automating this cycle is essential, but it requires a significant shift from traditional ways of managing Kubernetes health.

Maintaining Kubernetes performance requires continuous attention across workloads, native resources, and the ecosystem of add-ons such as CRDs and operators. Beyond incident response, proactive and preventive management demands intelligent monitoring that tracks real-time metrics and detects anomalies in CPU, memory, and I/O. Engineers must fine-tune autoscaling, manage resource quotas, and optimize configurations to keep performance consistent as workloads scale.
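To make anomaly detection concrete, here is a minimal sketch that flags CPU samples deviating sharply from a rolling baseline. It assumes usage samples are already collected from your metrics pipeline as a plain list of millicore values. The function name `find_anomalies`, the window size, and the z-score threshold of 3 are hypothetical choices, not taken from any particular monitoring tool.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=10, threshold=3.0):
    """Return indices of samples whose z-score against the preceding
    `window` samples exceeds `threshold` (a rolling z-score check)."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]  # trailing window, excluding sample i
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any deviation at all counts as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
        elif abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical CPU usage in millicores: steady around 200m, then one spike.
    cpu_millicores = [198, 202, 200, 199, 201, 203, 197, 200, 202, 198, 950, 201]
    print(find_anomalies(cpu_millicores))  # prints [10]
```

A single spike inflates the baseline for the next `window` samples and makes the detector less sensitive during that period. A production detector might instead use a robust statistic such as the median absolute deviation.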

However, monitoring alone isn’t enough. Effective cluster health assessment involves a deep understanding of how Kubernetes components like the API server, etcd, and kubelet interact, especially under failure conditions. Diagnosing critical issues such as memory leaks, container restarts, or CPU throttling requires analyzing logs, tracing events, and setting alert thresholds to distinguish between minor and major incidents.
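As a sketch of how alert thresholds can separate minor from major incidents, the code below classifies a container as `ok`, `minor`, or `major`. It uses two signals over an observation window: the increase in the container's restart count, and its CPU throttling ratio. The ratio follows a common approach: divide the increase in cAdvisor's `container_cpu_cfs_throttled_periods_total` counter by the increase in `container_cpu_cfs_periods_total`. Throttling only applies to containers that have a CPU limit. The `ContainerWindow` type, the `classify` function, and every threshold value are hypothetical and should be tuned per workload.

```python
from dataclasses import dataclass


@dataclass
class ContainerWindow:
    """Counter deltas for one container over an observation window (hypothetical type)."""
    name: str
    restarts: int            # increase in restart count during the window
    cfs_periods: int         # increase in container_cpu_cfs_periods_total
    throttled_periods: int   # increase in container_cpu_cfs_throttled_periods_total


# Hypothetical thresholds; tune them for your own workloads.
MINOR_RESTARTS, MAJOR_RESTARTS = 1, 5
MINOR_THROTTLE, MAJOR_THROTTLE = 0.25, 0.50


def throttling_ratio(w: ContainerWindow) -> float:
    """Fraction of CFS enforcement periods in which the container was throttled."""
    return w.throttled_periods / w.cfs_periods if w.cfs_periods else 0.0


def classify(w: ContainerWindow) -> str:
    """Map restart and throttling signals to an alert severity."""
    ratio = throttling_ratio(w)
    if w.restarts >= MAJOR_RESTARTS or ratio >= MAJOR_THROTTLE:
        return "major"
    if w.restarts >= MINOR_RESTARTS or ratio >= MINOR_THROTTLE:
        return "minor"
    return "ok"


if __name__ == "__main__":
    for w in [
        ContainerWindow("api", restarts=0, cfs_periods=600, throttled_periods=30),
        ContainerWindow("worker", restarts=2, cfs_periods=600, throttled_periods=60),
        ContainerWindow("cache", restarts=0, cfs_periods=600, throttled_periods=420),
    ]:
        print(w.name, f"{throttling_ratio(w):.2f}", classify(w))
```

Either signal alone can escalate the severity, so a container that restarts often is flagged even if it is never throttled.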

Common Issues That Impact Kubernetes Health

While it’s imperative to address emergencies and system issues that can bring down an entire cluster, there’s even greater value in continuously improving your Kubernetes setup so that it stays reliable over time. After resolving immediate problems, platform teams should focus on identifying failure patterns, optimizing resource usage, and improving the operational readiness of their clusters.

That’s because more subtle, often-overlooked problems can degrade performance and stability over time if neglected. Here’s a checklist of common issues that may not initially seem critical but can cause long-term damage if left unchecked:

- Resource Utilization Inefficiencies

Pods can easily overconsume resources such as CPU and memory, leaving the cluster under strain. The scheduler places pods according to their resource requests, while limits cap what a container may use at runtime. Setting the right requests and limits is therefore necessary to ensure that resources are used efficiently and that workloads do not starve each other or degrade cluster performance.

- Pod Eviction and Scheduling Failures

When pods cannot be scheduled, or are evicted because a node is under resource pressure, the disruption can cascade across dependent services. This typically happens when nodes are overloaded or the cluster lacks the capacity to handle its workloads. Regularly monitoring node health and capacity helps maintain performance and prevent resource bottlenecks. An autoscaler such as Karpenter can add nodes when demand grows.

- Persistent Volume Failures

Persistent storage issues such as failed volumes can lead to degraded application performance, data loss, or even service outages. Errors such as FailedAttachVolume or FailedMount occur when the volume bound to a persistent volume claim (PVC) cannot be attached to the node or mounted into the pod, cutting the application off from essential data. Proactively addressing these issues helps maintain stability and prevents serious disruptions.

- Image Pull Errors

Kubernetes relies on container images to deploy and run applications. If an image cannot be pulled, whether because of a broken image path, an incorrect version tag, or an inaccessible registry, applications may fail to deploy or run. Regular checks on image names, tags, and registry accessibility are essential to prevent deployment failures and ensure reliability.

- Outdated Dependencies and Misconfigurations

In fast-moving environments, outdated or vulnerable dependencies can expose clusters to security risks or compatibility issues. Regularly auditing your clusters for end-of-life (EoL) versions and deprecated API versions helps maintain security and compatibility.
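For the Resource Utilization Inefficiencies item above, a simple first check is to find containers that declare no CPU or memory requests or limits. The sketch below walks the structure that `kubectl get pods -A -o json` returns (`items[].spec.containers[].resources`). The function name `find_missing_resources` and the `REQUIRED` policy are hypothetical, and the inline pod list stands in for real command output.

```python
# Hypothetical policy: every container should declare these (section, resource) pairs.
REQUIRED = [("requests", "cpu"), ("requests", "memory"),
            ("limits", "cpu"), ("limits", "memory")]


def find_missing_resources(pod_list: dict, required=REQUIRED):
    """Yield (namespace/pod, container, missing fields) for each non-compliant container."""
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        pod_id = f"{meta.get('namespace', 'default')}/{meta.get('name', '?')}"
        # Only regular containers are checked; initContainers are ignored here.
        for container in pod.get("spec", {}).get("containers", []):
            resources = container.get("resources", {})
            missing = [f"{section}.{res}" for section, res in required
                       if res not in resources.get(section, {})]
            if missing:
                yield pod_id, container.get("name", "?"), missing


if __name__ == "__main__":
    # Stand-in for `kubectl get pods -A -o json` output, trimmed to the fields used.
    pods = {"items": [
        {"metadata": {"name": "web-1", "namespace": "shop"},
         "spec": {"containers": [
             {"name": "web", "resources": {
                 "requests": {"cpu": "250m", "memory": "256Mi"},
                 "limits": {"cpu": "500m", "memory": "512Mi"}}},
             {"name": "sidecar", "resources": {"requests": {"cpu": "50m"}}}]}},
    ]}
    for pod_id, container, missing in find_missing_resources(pods):
        print(pod_id, container, "missing:", ", ".join(missing))
```

Passing a different `required` list lets each team encode its own policy, for example dropping CPU limits if it prefers to avoid throttling.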
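The Pod Eviction and Scheduling Failures and Persistent Volume Failures items both surface as Kubernetes events. The sketch below groups events, as returned by `kubectl get events -A -o json`, into checklist categories by their `reason` field. It uses the reasons `FailedScheduling`, `Evicted`, `FailedAttachVolume`, and `FailedMount`. The `CATEGORIES` mapping and the `triage_events` function are hypothetical, the mapping is intentionally incomplete, and the sample messages are illustrative.

```python
from collections import defaultdict

# Hypothetical mapping from event `reason` to a checklist category (not exhaustive).
CATEGORIES = {
    "FailedScheduling": "scheduling",
    "Evicted": "eviction",
    "FailedAttachVolume": "storage",
    "FailedMount": "storage",
}


def triage_events(event_list: dict) -> dict:
    """Group events by category; each entry is (namespace/name, reason, message)."""
    grouped = defaultdict(list)
    for event in event_list.get("items", []):
        reason = event.get("reason", "")
        category = CATEGORIES.get(reason)
        if category is None:
            continue  # reasons outside the mapping are ignored
        obj = event.get("involvedObject", {})
        target = f"{obj.get('namespace', '')}/{obj.get('name', '')}"
        grouped[category].append((target, reason, event.get("message", "")))
    return dict(grouped)


if __name__ == "__main__":
    # Stand-in for `kubectl get events -A -o json` output, trimmed to the fields used.
    events = {"items": [
        {"reason": "FailedScheduling", "message": "0/3 nodes are available: 3 Insufficient cpu.",
         "involvedObject": {"kind": "Pod", "namespace": "shop", "name": "web-2"}},
        {"reason": "FailedMount", "message": "Unable to attach or mount volumes",
         "involvedObject": {"kind": "Pod", "namespace": "db", "name": "pg-0"}},
        {"reason": "Pulled", "message": "Successfully pulled image",
         "involvedObject": {"kind": "Pod", "namespace": "shop", "name": "web-1"}},
    ]}
    for category, entries in triage_events(events).items():
        for target, reason, message in entries:
            print(f"[{category}] {target} {reason}: {message}")
```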
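For the Image Pull Errors item, a failing pull appears in each container's status: the container stays in the `waiting` state with a reason such as `ErrImagePull` or `ImagePullBackOff`. The sketch below scans pod JSON (as from `kubectl get pods -A -o json`) for those reasons and for `InvalidImageName`. The function name and reason set are hypothetical choices that cover only the common cases.

```python
# Container waiting reasons that indicate the image could not be pulled or resolved.
IMAGE_PULL_REASONS = {"ErrImagePull", "ImagePullBackOff", "InvalidImageName"}


def find_image_pull_failures(pod_list: dict):
    """Yield (namespace/pod, container, image, reason) for containers stuck on image pulls."""
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        pod_id = f"{meta.get('namespace', 'default')}/{meta.get('name', '?')}"
        # Only regular containers are checked; initContainerStatuses is ignored here.
        for status in pod.get("status", {}).get("containerStatuses", []):
            waiting = status.get("state", {}).get("waiting") or {}
            reason = waiting.get("reason")
            if reason in IMAGE_PULL_REASONS:
                yield pod_id, status.get("name", "?"), status.get("image", "?"), reason


if __name__ == "__main__":
    # Stand-in for `kubectl get pods -A -o json` output, trimmed to the fields used.
    pods = {"items": [
        {"metadata": {"name": "web-3", "namespace": "shop"},
         "status": {"containerStatuses": [
             {"name": "web", "image": "registry.example.com/shop/web:1.4.3",
              "state": {"waiting": {"reason": "ImagePullBackOff"}}}]}},
        {"metadata": {"name": "web-1", "namespace": "shop"},
         "status": {"containerStatuses": [
             {"name": "web", "image": "registry.example.com/shop/web:1.4.2",
              "state": {"running": {}}}]}},
    ]}
    for pod_id, container, image, reason in find_image_pull_failures(pods):
        print(f"{pod_id} {container}: {reason} pulling {image}")
```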
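To audit for deprecated API versions, one approach is to compare each manifest's `apiVersion` and `kind` against the upstream release that stopped serving that combination. The `REMOVED_IN` table below is a small, illustrative subset of the upstream Deprecated API Migration Guide, which should be consulted for the complete list. The `parse_minor` and `find_removed_apis` helpers are hypothetical, and the code assumes manifests have already been parsed into dicts, for example with a YAML library.

```python
# Illustrative subset of (apiVersion, kind) pairs and the upstream release that
# stopped serving them. Consult the Deprecated API Migration Guide for the full list.
REMOVED_IN = {
    ("extensions/v1beta1", "Deployment"): "1.16",
    ("apps/v1beta1", "Deployment"): "1.16",
    ("extensions/v1beta1", "Ingress"): "1.22",
    ("networking.k8s.io/v1beta1", "Ingress"): "1.22",
    ("batch/v1beta1", "CronJob"): "1.25",
    ("policy/v1beta1", "PodDisruptionBudget"): "1.25",
    ("policy/v1beta1", "PodSecurityPolicy"): "1.25",
    ("autoscaling/v2beta2", "HorizontalPodAutoscaler"): "1.26",
}


def parse_minor(version: str) -> tuple:
    """Turn '1.25', 'v1.27.3', or similar into a comparable (major, minor) tuple."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def find_removed_apis(manifests: list, target_version: str) -> list:
    """Return (kind, name, apiVersion, removed_in) for manifests the target release no longer serves."""
    target = parse_minor(target_version)
    findings = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        removed = REMOVED_IN.get(key)
        if removed and target >= parse_minor(removed):
            name = manifest.get("metadata", {}).get("name", "?")
            findings.append((key[1], name, key[0], removed))
    return findings


if __name__ == "__main__":
    manifests = [
        {"apiVersion": "batch/v1beta1", "kind": "CronJob", "metadata": {"name": "nightly"}},
        {"apiVersion": "batch/v1", "kind": "CronJob", "metadata": {"name": "hourly"}},
        {"apiVersion": "autoscaling/v2beta2", "kind": "HorizontalPodAutoscaler",
         "metadata": {"name": "web"}},
    ]
    for kind, name, api, removed in find_removed_apis(manifests, "1.26"):
        print(f"{kind}/{name} uses {api}, which is no longer served as of {removed}")
```

Running the check against the version you plan to upgrade to, rather than the current one, surfaces breaking manifests before the upgrade.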

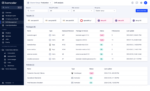

Empowering Developers to Troubleshoot

Given the scale of modern Kubernetes environments, it’s best to delegate some troubleshooting responsibilities to developers, especially for application-specific issues. However, this doesn’t mean simply handing off tasks. Instead, it’s about empowering developers with the tools and visibility they need to understand the state of their applications within Kubernetes.

With access to the right self-service tools, developers can troubleshoot and resolve issues in their applications independently. This also reduces the burden on DevOps and SRE teams, freeing them to focus on maintaining the overall health of the cluster.

Moving Beyond Immediate Fixes

Kubernetes assessments require both high-level checks and detailed issue analysis. Here’s a quick checklist for proactive cluster health management:

- Proactively Mitigate Reliability Risks

Continuously monitor your Kubernetes clusters for potential issues such as cascading failures, infrastructure problems affecting workloads, misconfigured workloads that hog resources, failed or hanging add-ons with cluster-wide impact, and clusters nearing end-of-life (EoL). Use auto-generated playbooks to handle these risks efficiently.

- Avoid Configuration Drift and Maintain Version Consistency

Prevent configuration drift by using tools with deep, contextual visibility to monitor and compare configurations across clusters, and identify deviations that could lead to performance or reliability problems. Track release rollouts, detect resource consumption anomalies, and get alerts for breaking changes or other issues, complete with failure analysis and remediation steps.

- Enforce Governance and Standards Across the Organization

Minimize security risks and prevent downtime by enforcing strong governance and guardrails throughout your Kubernetes environment. Define policies, detect violations, and assess each violation’s severity and runtime impact. Integrate with policy engines such as Open Policy Agent (OPA) and Kyverno to maintain security and compliance.
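For the end-of-life concern in the Proactively Mitigate Reliability Risks item above, note that upstream Kubernetes maintains release branches for the three most recent minor releases. A cluster three or more minor versions behind the newest release is therefore outside upstream support. The sketch below encodes that rule. The `support_status` function and its `supported_minors` parameter are hypothetical. The newest version is passed in rather than hardcoded, and managed Kubernetes providers publish their own support calendars, which take precedence for those clusters.

```python
def parse_minor(version: str) -> tuple:
    """Turn '1.29', 'v1.27.3', or similar into a comparable (major, minor) tuple."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def support_status(cluster_version: str, latest_version: str, supported_minors: int = 3) -> str:
    """Classify a cluster against upstream's 'most recent N minor releases' support window."""
    cluster_major, cluster_minor = parse_minor(cluster_version)
    latest_major, latest_minor = parse_minor(latest_version)
    if cluster_major != latest_major:
        raise ValueError("this sketch only compares versions within one major release")
    behind = latest_minor - cluster_minor
    if behind >= supported_minors:
        return "end-of-life"
    if behind == supported_minors - 1:
        # Oldest supported minor: it drops out of support at the next minor release.
        return "nearing end-of-life"
    return "supported"


if __name__ == "__main__":
    latest = "1.31"  # example value only; check the current upstream release
    for version in ["1.31", "1.29", "v1.27.3"]:
        print(version, support_status(version, latest))
```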
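At its core, configuration drift detection is a diff between the same configuration as seen in two places. The sketch below flattens two nested configurations into dotted paths and reports the keys that were added, removed, or changed. An example would be the same Deployment exported from a staging cluster and a production cluster. The `flatten` and `diff_configs` names and the sample data are hypothetical. A real comparison would first strip server-populated fields such as `status` and `metadata.resourceVersion`.

```python
def flatten(obj, prefix=""):
    """Flatten nested dicts/lists into {"a.b[0].c": value} pairs (empty containers vanish)."""
    items = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            items.update(flatten(value, f"{prefix}.{key}" if prefix else key))
    elif isinstance(obj, list):
        # Lists are compared by index, so reordering shows up as changes.
        for i, value in enumerate(obj):
            items.update(flatten(value, f"{prefix}[{i}]"))
    else:
        items[prefix] = obj
    return items


def diff_configs(baseline: dict, other: dict) -> dict:
    """Report dotted paths added to, removed from, or changed in `other` relative to `baseline`."""
    a, b = flatten(baseline), flatten(other)
    return {
        "added": sorted(set(b) - set(a)),
        "removed": sorted(set(a) - set(b)),
        "changed": sorted(k for k in set(a) & set(b) if a[k] != b[k]),
    }


if __name__ == "__main__":
    staging = {"spec": {"replicas": 2, "template": {"spec": {"containers": [
        {"name": "web", "image": "shop/web:1.4.2"}]}}}}
    production = {"spec": {"replicas": 2, "template": {"spec": {"containers": [
        {"name": "web", "image": "shop/web:1.4.0",
         "resources": {"limits": {"memory": "512Mi"}}}]}}}}
    for kind, paths in diff_configs(staging, production).items():
        for path in paths:
            print(f"{kind}: {path}")
```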
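Policy engines express rules in their own formats: OPA uses the Rego language and Kyverno uses YAML policies. The Python sketch below is therefore not either engine's syntax. It only illustrates the evaluation model described above: each rule inspects a pod spec and reports violations with a severity. The two rules (no `latest` or untagged images, no privileged containers), the severity labels, and the function names are hypothetical.

```python
# Hypothetical severity ranking used to order findings (highest first).
SEVERITY_ORDER = {"high": 0, "medium": 1}


def uses_latest_or_no_tag(image: str) -> bool:
    """True if an image reference has no tag or uses 'latest' (digest-pinned images pass)."""
    if "@" in image:  # pinned by digest, e.g. repo@sha256:...
        return False
    last = image.rsplit("/", 1)[-1]  # drop the registry host (which may have a port) and path
    tag = last.split(":", 1)[1] if ":" in last else None
    return tag is None or tag == "latest"


def check_pod(pod: dict) -> list:
    """Evaluate two illustrative rules; return (severity, pod, container, message) tuples."""
    name = pod.get("metadata", {}).get("name", "?")
    violations = []
    for c in pod.get("spec", {}).get("containers", []):
        cname = c.get("name", "?")
        if uses_latest_or_no_tag(c.get("image", "")):
            violations.append(("medium", name, cname, "image uses 'latest' or has no tag"))
        if c.get("securityContext", {}).get("privileged"):
            violations.append(("high", name, cname, "container runs privileged"))
    return sorted(violations, key=lambda v: SEVERITY_ORDER[v[0]])


if __name__ == "__main__":
    pod = {"metadata": {"name": "checkout"},
           "spec": {"containers": [
               {"name": "app", "image": "shop/app:latest"},
               {"name": "debug", "image": "busybox:1.36",
                "securityContext": {"privileged": True}}]}}
    for severity, pod_name, container, message in check_pod(pod):
        print(f"[{severity}] {pod_name}/{container}: {message}")
```

Attaching a severity to each finding is what lets a team block high-severity violations at admission while only reporting lower-severity ones.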

Maintaining the health of Kubernetes environments requires more than just quick fixes or reactive problem-solving. It demands a continuous, proactive process to ensure long-term stability, performance, and cost-efficiency.